Recently, I had the pleasure of representing CSIRO at the AAAI conference in Washington DC. Amid a large gathering of people with divergent ideas and attitudes about the state and future of Artificial Intelligence, I came away with three observations worth sharing:

- Academics are impressed by the expressiveness of neural-network systems, notably including transformer-based large language models (LLMs) - such as ChatGPT.

- Yet, research on robust and efficient classical reasoning algorithms is also continuing.

- Thus, there is anticipation about the future interplay of these systems - a field called 'NeuroSymbolic' AI.

This contrast and interplay between Symbolic and Neural AI can also be seen, and anticipated, in Bioinformatics.

1. Deep learning neural network models continue to amaze academics

Due to the remarkable successes of LLMs, the conference was attended by academics considering a range of new questions.

For instance: how much could (or should) the English language be used as a medium for internal representation in general reasoning and knowledge tasks, such as 'commonsense' encoding problems in robotics?

Were it not for the current excitement about recent successes in AI, questions like these would have seemed far less likely to be asked only a few years ago.

During the conference, the enthusiasm surrounding modern neural network applications was evident. From controlling nuclear fusion to weather modelling, from dynamic textual and artistic generation to vocal synthesis and music generation, the potential for such AI advancements to disrupt society was palpable. I was most impressed by the keynote speakers seeking to incorporate new insights from dynamics in physics into AI science.

This excitement over the advances of neural networks extends to Bioinformatics too, as one of the most incredible successes of neural network AI has been demonstrated in relation to protein folding.

With this excitement, it is easy to be carried away with optimism about the potential of Neural Networks. However, we need to recognise that Neural Networks won't be able to solve everything: there are limitations and constraints on the types of problems they can reasonably be applied to.

2. Deep learning neural networks are unlikely to replace classical symbolic algorithms

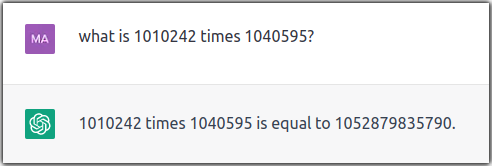

While language models like ChatGPT have shown incredible success in certain areas, there are still limitations to their capabilities. Consider the illustrative task of multiplying numbers together:

In this context, your pocket calculator returns a more accurate answer, and likely does so faster and more cheaply. The reason a calculator succeeds in this case is simple: it is a purpose-built symbol-processing machine running an efficient classical algorithm.

By contrast, LLMs are trained by fitting to a dataset, and training one over a combinatorially large set of multiplications is comparatively inefficient.
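To make the contrast concrete, here is a minimal Python sketch of schoolbook long multiplication on digit strings. The function name `long_multiply` is an illustrative choice, and a real calculator would use its own hardware or library routine rather than this code. The point is only that a few lines of exact symbol manipulation give the right answer for inputs of any length.

```python
def long_multiply(a: str, b: str) -> str:
    """Multiply two non-negative decimal integers given as digit strings.

    Illustrative sketch of schoolbook long multiplication; the name and
    interface are assumptions made for this example.
    """
    # Store digits least-significant first so index i means 10**i.
    x = [int(ch) for ch in reversed(a)]
    y = [int(ch) for ch in reversed(b)]
    result = [0] * (len(x) + len(y))

    for i, dx in enumerate(x):
        carry = 0
        for j, dy in enumerate(y):
            total = result[i + j] + dx * dy + carry
            result[i + j] = total % 10
            carry = total // 10
        # Whatever is left over goes into the next, still untouched, position.
        result[i + len(y)] += carry

    # Leading zeros sit at the end of the reversed list.
    while len(result) > 1 and result[-1] == 0:
        result.pop()
    return "".join(str(d) for d in reversed(result))
```

Calling `long_multiply("12345", "6789")` returns the product as a string, and the result can be checked against Python's built-in integers with `str(12345 * 6789)`.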

For the purpose of attaining precise and efficient output, our modern world is currently built upon a range of very powerful (and well developed) classical algorithms, for instance:

- Ask Google how to travel to your nearest city, and it will use the A* algorithm.

- Tell your phone to store an image as a JPEG, and it will use a fast discrete cosine transform, a close relative of the fast Fourier transform.

- Type your password into your computer, and it will (hopefully) use AES.

Additionally, symbolic and classical algorithms still have a foundational place in Bioinformatics, such as for pairwise and multiple sequence alignment (including the historical Needleman-Wunsch, BLAST and Clustal).
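As an illustration of how compact such a classical algorithm can be, the sketch below computes the Needleman-Wunsch global alignment score with dynamic programming. The function name and the scoring values (match +1, mismatch -1, gap -1) are assumptions chosen for the example. Production tools use substitution matrices and more refined gap penalties, and also recover the alignment itself rather than only its score.

```python
def needleman_wunsch_score(a: str, b: str,
                           match: int = 1,
                           mismatch: int = -1,
                           gap: int = -1) -> int:
    """Return the optimal global alignment score of sequences a and b.

    Illustrative sketch: scoring parameters are assumed defaults, and
    only the score (not the traceback) is computed.
    """
    rows, cols = len(a) + 1, len(b) + 1
    score = [[0] * cols for _ in range(rows)]

    # Aligning a prefix against the empty sequence costs one gap per symbol.
    for i in range(1, rows):
        score[i][0] = i * gap
    for j in range(1, cols):
        score[0][j] = j * gap

    for i in range(1, rows):
        for j in range(1, cols):
            substitution = match if a[i - 1] == b[j - 1] else mismatch
            diagonal = score[i - 1][j - 1] + substitution  # align a[i-1] with b[j-1]
            up = score[i - 1][j] + gap                     # gap in b
            left = score[i][j - 1] + gap                   # gap in a
            score[i][j] = max(diagonal, up, left)

    return score[-1][-1]
```

For example, aligning `"ACGT"` with `"AGT"` under these assumed scores gives three matches and one gap, so `needleman_wunsch_score("ACGT", "AGT")` returns 2.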

When we consider Neural Networks as just one of many algorithms, we may be inclined to think more pessimistically about their potential. However, there is still scope and potential for developing new algorithms for complex problems.

3. A bridge between Neural and Symbolic is NeuroSymbolic

The term "NeuroSymbolic AI" represents AI at the interface between Neural (as referring to neural networks) and Symbolic (as referring to classical reasoning).

The promise of combining the expressiveness of Neural Networks with the exactness of symbolic reasoning algorithms, to create AI more powerful than either, was the topic of the most interesting workshops and keynote presentations at AAAI-23.

Consider that some of the most successful applications of neural networks are already hybridised with classical logic - for instance:

- The program that defeated the world's best Go players (AlphaGo) was not just a deep Neural Network; it also used a Monte Carlo tree search algorithm that relied on deep neural networks for state value functions.

- Language models like ChatGPT aren't merely deep neural networks; they include structures, such as the multi-head attention mechanism, designed specifically so that backpropagation can select critical features.

So, instead of thinking optimistically or pessimistically, perhaps it is better to think pragmatically about how we can leverage and hybridise Neural Networks where needed.

Consider:

How can overly rigid algorithmic elements in classical routines be made into differentiable expressions for gradient descent?

Or alternatively:

How can classical elements be used to inform the gradient descent for adjoined Neural/differentiable elements?
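One common way to approach the first of these questions, offered here as an illustrative sketch rather than as a method presented at the conference, is to replace a hard `max` (such as the one inside a dynamic programming recurrence) with a smooth log-sum-exp relaxation. The hard `max` passes gradient to only one of its inputs, whereas the smooth version spreads gradient across all of them according to a softmax. The function names and the `temperature` parameter below are assumptions made for the example.

```python
import math


def smooth_max(values, temperature=1.0):
    """Log-sum-exp relaxation of max(values).

    As temperature approaches 0 this approaches the hard max; larger
    temperatures give a smoother (and larger) value.
    """
    m = max(values)  # subtract the max for numerical stability
    total = sum(math.exp((v - m) / temperature) for v in values)
    return m + temperature * math.log(total)


def smooth_max_gradient(values, temperature=1.0):
    """Gradient of smooth_max with respect to each input (a softmax)."""
    m = max(values)
    weights = [math.exp((v - m) / temperature) for v in values]
    total = sum(weights)
    return [w / total for w in weights]
```

With a small temperature, `smooth_max([1.0, 2.0, 3.0], temperature=0.01)` is numerically indistinguishable from the hard maximum of 3.0, while at higher temperatures every input receives a non-zero share of the gradient, which is what gradient descent needs in order to adjust all of the candidate branches.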

Trivia challenge

In the spirit of NeuroSymbolic AI, here is a challenge: one of the following sequences was algorithmically generated to be similar to the others.

Can you tell which one it is?

1: TGCAACGTTCCCTTTCAATAGAGCCCCCTGAATCTGCGCCGCTGAGTGATGGAACCAGACTAGCCAAACGGTAGAAAATC

2: TGGACCCAGCTCGTTCAATACAGACAGGTGAATCTGATCCTCAGAGCTATCGAACTGAGCTACCCAATCGCTTGAGAAGC

3: TGTCACTTTCCCATTCAATATAGTACCCTGAATATGCGCCGCTGAGTGACTGACACAGACTAGCTAGAGGCGAGAAAATC

4: CGCAACGGTATCATTTTACAGCCCCTCCTCAATCCGTGCCGCGCAGTGATGGATCTAAACAAGCAGAACGAGAGAAAAGC

5: TGCAACGTCCCAATTCAGGGGGAGCCTTTCATCCCGAGCCGCTGAGTGGCGAAACCAGACTAGCCAAACACCAGGCAAAT

6: TGCAACGCTCTCATACAATAAAGTTCCCCGAATCTGCGCCGCTGAGTGTTGGAATCAGACTAACCATACGGTAGACGATC

7: TGCAACGTTCCGTCTCACTCGAACCCCCCGGCTCTCAGAAACAAAGAGAAGGATCCAAACTCGGTAAACAGAAGAGAATC

8: TGCAGCGTTCATTATCAATTCCGCCCCCTGAATGTGCGCCGCTTAGTGAGGCAACCAGACTTACTAAACCGGAGAAAATC

9: CGCAATTCTTCCTCTCAGTAGAGCCCCCTGAATCTGCGGCGCTCATCGAGGGAACAAAAAAAGCCAAATGCAAGTATATC

10: TCCGACGTAATTTTAGACTAGAGTGTCTTTACTCTCGGACGCTGAGGGGTGGTGTCCGATCAGCGACACGGTACGAAATA

11: TGCATTGTTCCTTATTAGTAGCGCCTCCTGAAACGGCGCGTTAGATTGATCGTACTAGACTAGCTAAACGGTAGAAAATC

12: TACAACGTGTCCTTTCAATAGAGCCCCCTGATTCTCTCCCGAGGGGGCGTGGAAACACACTAGCCAAACGGGAGAAAATC

13: TGGAACGGACACTGTCGTTCGCCCCCCCTTTAGCGGCACTGCTGAGAGACCGAACCAGAATAGCGCAGCGGTAGAAAATC

14: TGCTACGTACTCTTTCAATCGAACACCCTGAATATGCGTAGCAGAGTGATGAAACTACCGTATCCCAGCGCTGGAAACTC

15: TGCATTGATCCCTTTCTACAAAGGCCCCTGGAGCTGCGCTAGTGTATGATGTAACCAGTCTAGGTAAACGGTACTAAACC

16: TCCAGCGTTTCCTTGCAATAGCGCCCCCTAAATCTGAACTGCGAAGTGCTGGGGCGAGAGTATCCGAACAAGAGGCATGC

17: CCTTGCGTCACATTTCGTCACAGCCCCCTGATTCTGCGCCGGTGAATGAAGGAAACACAAGAGAGTCACTGTCGACTCCC

18: TGCGGCCCTCCGGTCTTATAGAACACAGTGTACCTGCGACCCTGAGCCACAGAACCTGGAAAACCTAACGGTTGAATATA

19: TGCAATGTTCCATTTCAATAGATCGCCCTGCTTATGGGGCGCTGAGTCCCGGGAGGATACTAGCCAGTCCGGTGAAAACC

20: TGCCGCGACGGCTCTCAAGAGGGCCCCCCCTCCCTTAGGGGCGGTGAAACGAAACTTGACTTGCCAGATGGTGGAACAGC

