Are you confused by buzzwords like Artificial Intelligence (AI) and Deep Learning? Have you ever wondered whether Deep Learning is better than Machine Learning (ML), or why AI and ML are used interchangeably?

Keep reading to decode this tech jargon.

Why is it so confusing?

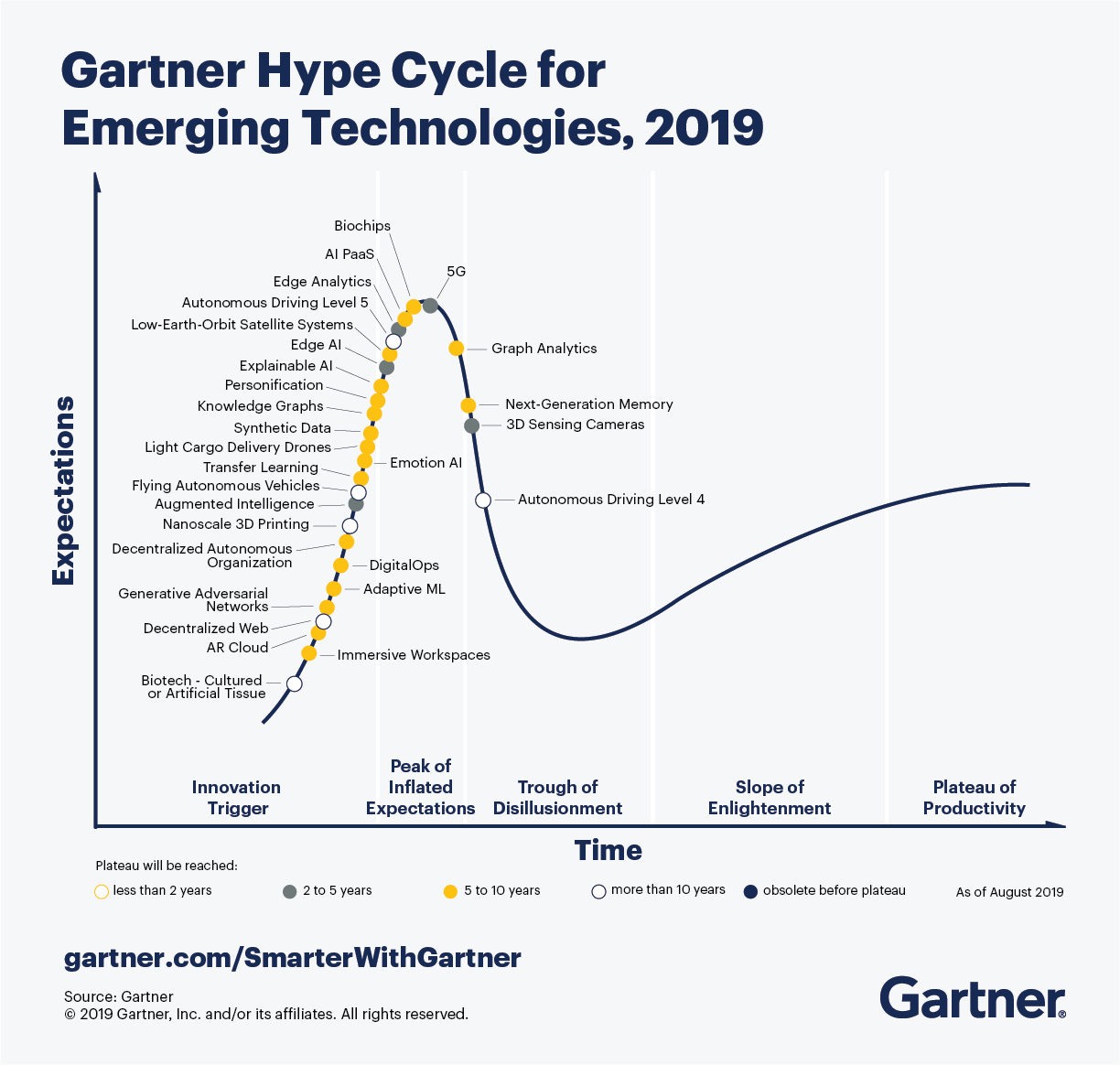

As technologies climb the hype cycle towards their "Peak of Inflated Expectations", they tend to lose their precise technical definitions. This is because non-practitioners introduce more colloquial interpretations, which can muddy the field. AI is certainly one such example: as it reaches its peak on the Gartner Hype Cycle, it has been interpreted in a variety of ways, from mere automation to Arnold Schwarzenegger's Terminator.

So what is the difference?

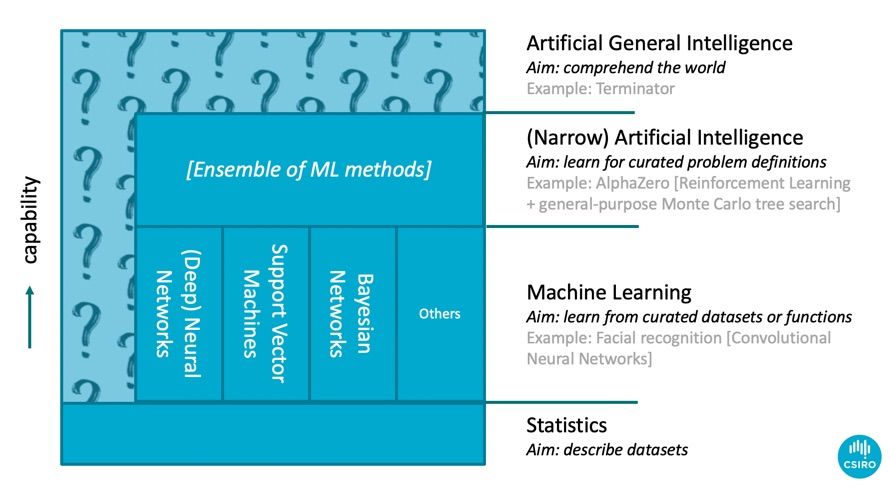

A widely adopted categorisation is along the spectrum of capability, with Artificial General Intelligence at the very top, followed by Artificial Intelligence and Machine Learning.

Artificial General Intelligence (AGI) currently exists only in science fiction. Think of machines like Data from Star Trek, able to independently understand the world and react meaningfully to new situations. Some add consciousness [1] to the list of requirements, which inevitably leads to concerns about the technological singularity: the hypothetical point in the future when machine intelligence rivals that of humans, resulting in potentially uncontrollable and irreversible outcomes for humankind.

AGI was first theorised by Alan Turing in 1950 [2], with current efforts combining approaches from computer science and neuroscience [3]. However, a 2018 survey of leading AI researchers (n=18) put the earliest date for a 50% chance of AGI existing at 2099, 80 years from now [4]. This suggests that fundamental technological pillars are likely still missing on the path to AGI (if it is possible at all).

Artificial Intelligence

AI, on the other hand, has been achieved by combining existing technologies. This is because the aim of AI can be defined much more narrowly: a machine capable of learning from human-curated problem definitions.

A frequently used example is AlphaZero [5], a successor of AlphaGo that learned autonomously to play Go, chess, and shogi (a Japanese variant of chess) without prior knowledge beyond the rules or external guidance. The research team achieved this using reinforcement learning and a general-purpose Monte Carlo tree search, which enabled the machine to learn from experience by playing against itself millions of times. This is considered a seminal milestone because the machine was able to master multiple games, with Go considered especially hard for AIs due to the vastness of its search space: the number of possible positions is greater than the number of atoms in the universe [6].

AlphaZero's Go-only predecessor, AlphaGo Zero, won 100 games to 0 against the version of AlphaGo that had defeated the human Go champion, after only 3 days of training from scratch. Even so, such a system is not capable of applying this knowledge to other domains. While capable of learning rapidly, the machine always requires the problem to be precisely scoped by a human before learning begins.
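To make the idea of learning by self-play more concrete, here is a minimal sketch. It is not AlphaZero's method (which combines deep neural networks with Monte Carlo tree search); it swaps in plain tabular Q-learning on a toy game chosen for illustration, in which players alternately take 1 to 3 stones from a pile and whoever takes the last stone wins. The function names and all parameter values are assumptions made for this example.

```python
import random

MAX_TAKE = 3  # assumed toy rule: a player removes 1, 2 or 3 stones per turn


def legal_moves(stones):
    return range(1, min(MAX_TAKE, stones) + 1)


def train_by_self_play(max_stones=12, episodes=20000, alpha=0.5, epsilon=0.5, seed=0):
    """Tabular Q-learning where one shared table plays both sides of the game."""
    rng = random.Random(seed)
    # q[s][a]: estimated outcome (+1 win, -1 loss) for the player who
    # takes `a` stones from a pile of `s` stones.
    q = {s: {a: 0.0 for a in legal_moves(s)} for s in range(1, max_stones + 1)}
    for _ in range(episodes):
        stones = rng.randint(1, max_stones)
        while stones > 0:
            moves = list(legal_moves(stones))
            if rng.random() < epsilon:
                take = rng.choice(moves)  # explore a random move
            else:
                take = max(moves, key=lambda a: q[stones][a])  # exploit
            remaining = stones - take
            if remaining == 0:
                target = 1.0  # taking the last stone wins
            else:
                # The opponent moves next: their best outcome is our worst.
                target = -max(q[remaining].values())
            q[stones][take] += alpha * (target - q[stones][take])
            stones = remaining  # the other player is now to move
    return q


def best_move(q, stones):
    """Greedy move according to the learned table."""
    return max(q[stones], key=q[stones].get)


q_table = train_by_self_play()
print({n: best_move(q_table, n) for n in range(1, 13)})
```

Note that a human still had to define the rules, the reward, and the state representation. The program learns the strategy (leave the opponent a multiple of four stones) on its own, but only inside that precisely scoped problem.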

Machine learning

ML comprises all the algorithms that form the technological building blocks enabling AI, as well as a wide range of applications such as face recognition [7] or guiding gene-editing approaches [8].

ML aims to learn autonomously from curated data, with or without labels attached to each data sample (supervised and unsupervised learning, respectively), or from precisely defined reward functions (reinforcement learning). The discipline has been active since 1959 and has produced a plethora of different approaches. Arguably, it has received a substantial boost in relevance from the recent increase in data volumes, which gave rise to the phrase "data is the new oil" [9].
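The difference between learning with and without labels can be sketched in a few lines. The example below is purely illustrative: the data, the label names, and the two simple methods (a nearest-centroid classifier and a two-cluster k-means) are assumptions chosen to keep it short, not a recommendation.

```python
from statistics import mean

values = [1, 2, 3, 10, 11, 12]
labels = ["low", "low", "low", "high", "high", "high"]


def fit_supervised(xs, ys):
    """Supervised: learn one centroid per label from labelled samples."""
    return {label: mean(x for x, y in zip(xs, ys) if y == label) for label in set(ys)}


def predict(centroids, x):
    """Assign the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def fit_unsupervised(xs, iterations=10):
    """Unsupervised: two-cluster k-means that never sees a label."""
    centres = [min(xs), max(xs)]
    for _ in range(iterations):
        groups = [[], []]
        for x in xs:
            nearest = min((0, 1), key=lambda i: abs(x - centres[i]))
            groups[nearest].append(x)
        # Keep the old centre if a cluster ends up empty.
        centres = [mean(g) if g else c for g, c in zip(groups, centres)]
    return centres


centroids = fit_supervised(values, labels)
print(predict(centroids, 4), predict(centroids, 9))
print(fit_unsupervised(values))
```

Both approaches find the same two groups here, but only the supervised model can name them, because the names came from the human-provided labels.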

Deep Learning

Deep Learning (DL), or Deep Neural Networks, is but one subset of ML algorithms, alongside support vector machines (SVM), Random Forests (RF), or reinforcement learning algorithms, to name a few. Originating from Artificial Neural Networks, which aim to mimic circuitry in the brain, DL architectures have many more layers of "neurons", allowing specialised functionality to arise within the model. Propagating information through such vast and complex networks only recently became feasible, thanks to the increase in compute power and improvements to the underlying algorithms. Convolutional Neural Networks (CNNs) in particular, which mimic the biological visual cortex, have found an ideal use case in image recognition tasks such as face recognition [7].

Another type of DL architecture is the Generative Adversarial Network (GAN) [10], which consists of two networks pitted against one another. GANs have, for the first time, conquered a skill set thought to be unique to humans: the creative arts. They are able to generate new music, paintings, and realistic faces at a quality equivalent to that of human artists. However, GANs need a set of examples to learn an artistic style before they can create original content in that style. This brings us back to the requirement of a well-defined dataset and the limitation of scope to a specific area.
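A tiny example shows why additional layers matter. The sketch below wires up a two-layer network by hand so that it computes XOR, a function that no single neuron of this kind can represent. The weights are hand-picked for illustration rather than learned, and real deep networks use many more neurons and smooth activation functions.

```python
def step(z):
    """Threshold activation: the neuron fires (1) only for positive input."""
    return 1 if z > 0 else 0


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs followed by the threshold activation."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_network(x1, x2):
    # Hidden layer: each neuron specialises in a simpler sub-problem.
    h_or = neuron([x1, x2], [1, 1], -0.5)   # fires if at least one input is 1
    h_and = neuron([x1, x2], [1, 1], -1.5)  # fires only if both inputs are 1
    # Output layer: "OR but not AND" is exactly XOR.
    return neuron([h_or, h_and], [1, -1], -0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

The hidden neurons take on specialised roles (one detects "either", the other "both"), and the output neuron combines them. Deep architectures stack many such layers, with the specialisation emerging during training rather than being set by hand.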

ML is not the poor cousin of AI

How far away are we from fully autonomous systems? Dr Bauer says ML isn’t the poor cousin of AI. It’s about whether a certain technology answers the questions you’re looking at. #ai #D61live

— Data61 (@Data61news) October 3, 2019

As outlined on the panel about "AI for environmental and social good" that I participated in at the recent D61+LIVE conference, AI is not always better than ML; nor are Deep Neural Networks (CNNs or GANs) superior to other ML approaches.

In fact, the "no free lunch" (NFL) theorem states that no single algorithm can be shown to be superior across all problems. Accordingly, one of the most challenging tasks for ML/AI researchers to master is choosing the right algorithm for the problem. With recent improvements in algorithm accessibility, most notably through the efforts of the public cloud providers, applying ML techniques has never been easier. However, as in any other scientific discipline, it takes years of practice to apply techniques skilfully and avoid pitfalls.

So, with more than 10 years of experience in Machine Learning, we are excited to apply our expertise to your problem. Let's find the right ML approach and work on health care challenges together.
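The spirit of this can be illustrated informally (this is not a proof of the theorem) by letting two simple algorithms compete on two made-up problems. Everything below is an assumption for illustration: the data, the choice of a decision stump versus a nearest-neighbour classifier, and the helper names.

```python
def fit_stump(xs, ys):
    """Decision stump: pick the threshold and direction with fewest training errors."""
    points = sorted(xs)
    thresholds = [(a + b) / 2 for a, b in zip(points, points[1:])]
    best = None
    for t in thresholds:
        for above in (1, 0):  # label predicted for x > t
            errors = sum((above if x > t else 1 - above) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, above)
    _, best_t, best_above = best
    return lambda x: best_above if x > best_t else 1 - best_above


def fit_nearest_neighbour(xs, ys):
    """1-nearest neighbour: predict the label of the closest training sample."""
    return lambda x: min(zip(xs, ys), key=lambda pair: abs(pair[0] - x))[1]


def accuracy(model, xs, ys):
    return sum(model(x) == y for x, y in zip(xs, ys)) / len(xs)


train_x = [0, 2, 4, 6, 8, 10, 12, 14]
test_x = [0.5, 2.5, 4.5, 8.5, 10.5, 12.5]

problems = {
    # Simple threshold rule, but one training label (x=2) is noisy.
    "noisy threshold": ([0, 1, 0, 0, 1, 1, 1, 1], [0, 0, 0, 1, 1, 1]),
    # Label is 1 only inside a middle band, which one threshold cannot capture.
    "middle band": ([0, 0, 1, 1, 1, 1, 0, 0], [0, 0, 1, 1, 1, 0]),
}

results = {}
for name, (train_y, test_y) in problems.items():
    stump = fit_stump(train_x, train_y)
    knn = fit_nearest_neighbour(train_x, train_y)
    results[name] = (accuracy(stump, test_x, test_y), accuracy(knn, test_x, test_y))
    print(name, "stump:", results[name][0], "1-NN:", results[name][1])
```

On the noisy threshold problem the rigid stump wins because it ignores the mislabelled sample, whereas on the middle band problem the flexible nearest-neighbour model wins because a single threshold cannot describe the pattern. Neither algorithm is better in general; it depends on the problem.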